Emotional Connection Key to Effective AI Therapy, Study Finds

Research from the University of Sussex has found that mental health chatbots are most effective when users establish an emotional connection with their AI therapists. Published in the journal Social Science & Medicine, the study highlights the role of "synthetic intimacy" in the efficacy of chatbot therapy, which is increasingly used across the U.K.

With more than one in three U.K. residents now using AI tools to support their mental well-being, the research sheds light on the dual nature of such technology. Forming emotional bonds with AI can improve therapeutic outcomes, but it also introduces potential psychological risks. The analysis drew on feedback from approximately 4,000 users of Wysa, a leading mental health app used within the NHS Talking Therapies program.

According to Dr. Runyu Shi, Assistant Professor at the University of Sussex, forming an emotional bond with AI initiates a healing process characterized by self-disclosure. Users often report positive experiences, yet the study cautions that synthetic intimacy can leave harmful thought patterns unchallenged. Vulnerable users may become stuck in a repetitive cycle, unable to progress toward the clinical intervention they need.

As relationships with AI gain traction globally, even extending to romantic ones, the study identifies the stages through which emotional intimacy with AI develops. Users engage in intimate behavior by sharing personal information, which elicits emotional responses such as gratitude and a sense of safety. This cycle deepens the connection, and users begin attributing human-like characteristics to the app.

The research indicates that many users describe Wysa with terms such as "friend," "companion," and "therapist," reflecting how significant these relationships are to their mental health. Professor Dimitra Petrakaki, also of the University of Sussex, emphasized that synthetic intimacy is now a reality of contemporary life. She urges policymakers and app designers to acknowledge this phenomenon and to ensure that users in critical need are directed toward appropriate clinical support.

As mental health chatbots increasingly fill gaps left by overstretched services, organizations such as Mental Health UK are calling for urgent safeguards to ensure that users receive safe, reliable, and appropriate information and support.

The findings underscore the need for ongoing dialogue among stakeholders about the role of AI in mental health care. The study highlights AI's potential to enhance therapeutic experiences while calling for responsible strategies to manage the associated risks.

More information on this research can be found in the paper titled “User-AI intimacy in digital health,” authored by Runyu Shi and colleagues, published in Social Science & Medicine in 2025.

-

Science4 weeks ago

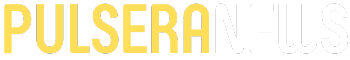

Science4 weeks agoALMA Discovers Companion Orbiting Giant Red Star π 1 Gruis

-

Top Stories2 months ago

Top Stories2 months agoNew ‘Star Trek: Voyager’ Game Demo Released, Players Test Limits

-

Politics2 months ago

Politics2 months agoSEVENTEEN’s Mingyu Faces Backlash Over Alcohol Incident at Concert

-

World2 months ago

World2 months agoGlobal Air Forces Ranked by Annual Defense Budgets in 2025

-

World2 months ago

World2 months agoMass Production of F-35 Fighter Jet Drives Down Costs

-

World2 months ago

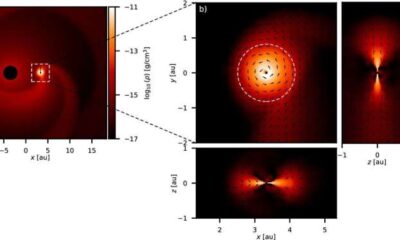

World2 months agoElectrification Challenges Demand Advanced Multiphysics Modeling

-

Business2 months ago

Business2 months agoGold Investment Surge: Top Mutual Funds and ETF Alternatives

-

Science2 months ago

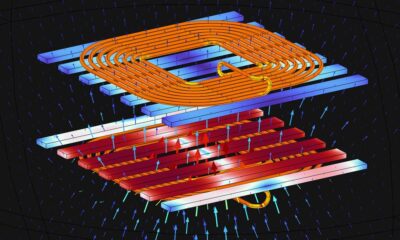

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

Top Stories2 months ago

Top Stories2 months agoDirecTV to Launch AI-Driven Ads with User Likenesses in 2026

-

Entertainment2 months ago

Entertainment2 months agoFreeport Art Gallery Transforms Waste into Creative Masterpieces

-

Health2 months ago

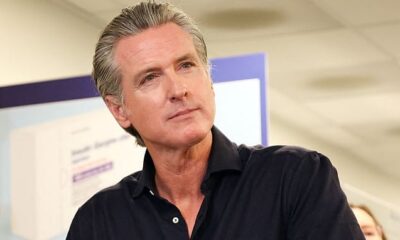

Health2 months agoGavin Newsom Critiques Trump’s Health and National Guard Plans

-

Business2 months ago

Business2 months agoUS Government Denies Coal Lease Bid, Impacting Industry Revival Efforts