Researchers Uncover How AI Can Enhance Autonomous Vehicle Safety

The safety of autonomous vehicles has taken center stage as researchers explore how artificial intelligence can make them more reliable. In a study published in the October issue of IEEE Transactions on Intelligent Transportation Systems, a team from the University of Alberta argued that explainable AI can make the decision-making of these vehicles more transparent. Because public trust hinges on autonomous systems performing without error, understanding how they reach their decisions is increasingly important.

Shahin Atakishiyev, a deep learning researcher involved in the study, noted that autonomous driving systems often function as a “black box”: passengers and bystanders typically have no insight into how these vehicles make real-time driving decisions. “With rapidly advancing AI, it’s now possible to ask the models why they make the decisions they do,” Atakishiyev said. That capability can build trust and also help engineers develop safer vehicles.

Real-Time Feedback for Enhanced Safety

The research team offered concrete examples of how real-time feedback could expose faulty decision-making. They cited a case study in which researchers placed a sticker on a 35 mph (approximately 56 kph) speed limit sign, causing a Tesla Model S to read the limit as 85 mph (about 137 kph). The vehicle accelerated toward the sign, illustrating the danger.

Atakishiyev noted that if the vehicle had communicated its rationale, for instance by announcing “The speed limit is 85 mph, accelerating,” passengers could have intervened. He also pointed out that deciding how much information to present is a challenge, because passengers differ in technical knowledge, cognitive abilities, and preferences. Feedback could be delivered through audio, visual cues, or other channels.
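
To make the idea concrete, here is a minimal Python sketch of a controller that returns a plain-language rationale with every speed decision, in the spirit of the message Atakishiyev describes. The `decide` and `explain` functions, the `SpeedDecision` record, and the two detail levels are hypothetical illustrations, not the researchers' system; the `level` switch gestures at the point about tailoring how much information passengers receive.

```python
from dataclasses import dataclass


@dataclass
class SpeedDecision:
    perceived_limit_mph: float  # limit as read by the perception stack (may be wrong)
    current_speed_mph: float
    action: str                 # "accelerating", "slowing down" or "holding speed"


def decide(perceived_limit_mph: float, current_speed_mph: float) -> SpeedDecision:
    """Toy (hypothetical) controller: move toward the perceived speed limit."""
    if current_speed_mph < perceived_limit_mph:
        action = "accelerating"
    elif current_speed_mph > perceived_limit_mph:
        action = "slowing down"
    else:
        action = "holding speed"
    return SpeedDecision(perceived_limit_mph, current_speed_mph, action)


def explain(d: SpeedDecision, level: str = "brief") -> str:
    """Render the decision's rationale; "detailed" adds the numbers behind it."""
    message = f"The speed limit is {d.perceived_limit_mph:.0f} mph, {d.action}"
    if level == "detailed":
        message += f" (current speed {d.current_speed_mph:.0f} mph)"
    return message


if __name__ == "__main__":
    # The sticker scenario: the camera reads 85 mph while the car travels at 35 mph.
    decision = decide(perceived_limit_mph=85, current_speed_mph=35)
    print(explain(decision))
    print(explain(decision, level="detailed"))
```

Because the message reports the *perceived* limit, a passenger who has just passed a 35 mph sign and hears “85 mph” has a chance to notice the mismatch and step in.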

While real-time intervention can prevent immediate hazards, analyzing decision-making failures after an incident can guide researchers toward safer vehicles. The team ran simulations in which a deep learning model made a range of driving decisions while being questioned about its rationale. This approach revealed where the model struggled to explain its actions, pointing to areas that need improvement.

Assessing Decisions with SHAP Analysis

The study also examined how SHapley Additive exPlanations (SHAP) can help interpret autonomous vehicle decisions. After a vehicle completes a drive, SHAP analysis assigns each feature used in its decisions a score reflecting that feature's contribution, revealing which factors most strongly influence driving behavior. “This analysis helps to discard less influential features and pay more attention to the most salient ones,” Atakishiyev explained.
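
The scoring idea behind SHAP comes from Shapley values in cooperative game theory: a feature's score is its average marginal contribution to the model's output, weighted over every subset of other features it could join. The sketch below computes those values exactly by enumerating subsets, with "absent" features set to a single baseline value. That is a simplifying assumption: the `shap` library works with background datasets and faster estimators, and exhaustive enumeration is only practical for a handful of features. The `brake_score` model and its feature names (`gap_m`, `pedestrian`, `rain`) are hypothetical.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Dict, FrozenSet

Features = Dict[str, float]


def brake_score(f: Features) -> float:
    """Hypothetical driving model: a higher score means stronger braking."""
    closeness = max(0.0, 30.0 - f["gap_m"]) / 30.0  # 0 once the gap to the lead vehicle is 30 m or more
    return (2.0 * f["pedestrian"] + 1.5 * closeness + 0.1 * f["rain"]
            + 1.0 * f["pedestrian"] * closeness)      # interaction term


def shapley_values(model: Callable[[Features], float], x: Features, baseline: Features) -> Features:
    """Exact Shapley values of model(x) relative to model(baseline)."""
    names = list(x)
    n = len(names)

    def value(present: FrozenSet[str]) -> float:
        # Features in `present` keep their observed value; the rest use the baseline.
        return model({k: x[k] if k in present else baseline[k] for k in names})

    phi: Features = {}
    for i in names:
        others = [k for k in names if k != i]
        total = 0.0
        for size in range(n):  # size of the coalition S that feature i joins
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = frozenset(subset)
                total += weight * (value(s | {i}) - value(s))
        phi[i] = total
    return phi


if __name__ == "__main__":
    observed = {"gap_m": 10.0, "pedestrian": 1.0, "rain": 0.0}
    typical = {"gap_m": 40.0, "pedestrian": 0.0, "rain": 0.0}
    scores = shapley_values(brake_score, observed, typical)
    # Rank features by the magnitude of their contribution.
    for name, score in sorted(scores.items(), key=lambda kv: abs(kv[1]), reverse=True):
        print(f"{name}: {score:+.3f}")
```

The scores add up to `brake_score(observed)` minus `brake_score(typical)`, which is the "additive" property in SHAP's name. Here `rain` scores exactly zero because its observed value matches the baseline; consistently small scores across many drives are the kind of signal that would justify setting a feature aside.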

The researchers also discussed the legal questions that arise when an autonomous vehicle is involved in an accident, such as whether the vehicle followed traffic regulations and whether it responded appropriately after a collision. Answering these questions is essential for identifying and correcting faults in the model.
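
Questions like these can, in principle, be checked against recorded drive data. The sketch below shows one way such a post-incident check might look, assuming a hypothetical log format (`LogRecord`) that stores vehicle speed, the posted limit from an independent source such as map data, and a collision flag; it is an illustration, not a procedure described in the study. It lists every sample where the vehicle exceeded the posted limit and checks whether, after the first collision, the vehicle came to a halt and stayed halted.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class LogRecord:
    t_s: float               # timestamp in seconds
    speed_mph: float         # measured vehicle speed
    posted_limit_mph: float  # limit from an independent source, e.g. map data (assumption)
    collision: bool          # True on the sample where a collision was detected


def audit(log: List[LogRecord], stop_threshold_mph: float = 0.5) -> Dict[str, object]:
    """Answer two post-incident questions from a hypothetical drive log."""
    # Question 1: did the vehicle follow the speed limit?
    speeding_times = [r.t_s for r in log if r.speed_mph > r.posted_limit_mph]

    # Question 2: after the first collision, did it come to a halt and stay halted?
    hit: Optional[int] = next((i for i, r in enumerate(log) if r.collision), None)
    stopped_and_stayed: Optional[bool] = None  # None means no collision was logged
    if hit is not None:
        after = [r.speed_mph for r in log[hit:]]
        first_stop = next((j for j, v in enumerate(after) if v <= stop_threshold_mph), None)
        stopped_and_stayed = first_stop is not None and all(
            v <= stop_threshold_mph for v in after[first_stop:]
        )
    return {
        "speeding_times_s": speeding_times,
        "collision_index": hit,
        "stopped_and_stayed": stopped_and_stayed,
    }


if __name__ == "__main__":
    drive = [
        LogRecord(0.0, 30.0, 35.0, False),
        LogRecord(1.0, 40.0, 35.0, False),
        LogRecord(2.0, 38.0, 35.0, True),
        LogRecord(3.0, 10.0, 35.0, False),
        LogRecord(4.0, 0.0, 35.0, False),
    ]
    print(audit(drive))
```

A real review would have to cover many more traffic rules and account for sensor noise; the stop threshold and log fields here are placeholders.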

As the field of autonomous vehicles matures, the techniques outlined in this research are gaining traction and could contribute to safer roads. “Explanations are becoming an integral component of AV technology,” Atakishiyev said, underscoring their role in evaluating operational safety and debugging existing systems.

Integrating explainable AI into autonomous vehicle technology aims not only to bolster public trust but also to make the roads safer. As researchers continue to refine these systems, pairing technical progress with transparency could reshape autonomous driving.

-

Top Stories2 months ago

Top Stories2 months agoNew ‘Star Trek: Voyager’ Game Demo Released, Players Test Limits

-

World2 months ago

World2 months agoGlobal Air Forces Ranked by Annual Defense Budgets in 2025

-

Science2 weeks ago

Science2 weeks agoALMA Discovers Companion Orbiting Giant Red Star π 1 Gruis

-

World2 months ago

World2 months agoMass Production of F-35 Fighter Jet Drives Down Costs

-

World2 months ago

World2 months agoElectrification Challenges Demand Advanced Multiphysics Modeling

-

Business2 months ago

Business2 months agoGold Investment Surge: Top Mutual Funds and ETF Alternatives

-

Science2 months ago

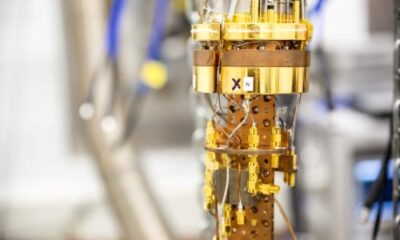

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

Top Stories2 months ago

Top Stories2 months agoDirecTV to Launch AI-Driven Ads with User Likenesses in 2026

-

Entertainment2 months ago

Entertainment2 months agoFreeport Art Gallery Transforms Waste into Creative Masterpieces

-

Business2 months ago

Business2 months agoUS Government Denies Coal Lease Bid, Impacting Industry Revival Efforts

-

Health2 months ago

Health2 months agoGavin Newsom Critiques Trump’s Health and National Guard Plans

-

Politics1 month ago

Politics1 month agoSEVENTEEN’s Mingyu Faces Backlash Over Alcohol Incident at Concert