Unraveling Causation: The Challenge of Identifying True Links

Understanding whether specific exposures—such as medications, treatments, or policy interventions—lead to particular outcomes is a fundamental pursuit of research. This quest often raises critical questions: Does homework enhance academic performance? What are the root causes of crime? Can the use of acetaminophen during pregnancy increase the risk of autism? Answering these queries is vital for informed decision-making at both individual and societal levels. Yet, establishing causation remains a complex challenge.

Identifying correlations is relatively straightforward; showing that one thing causes another is much harder. As the common saying goes, correlation does not equal causation. For instance, ice cream sales and crime rates may rise together during summer months, but one does not cause the other. Instead, factors like warmer weather and school breaks may influence both trends. In other cases, a phenomenon known as “selection bias” complicates the analysis: schools that assign more homework may also implement other policies that foster academic excellence. As a result, observed improvements in student performance may reflect these confounding factors rather than the impact of homework itself.

Challenges in Establishing Causation

To transition from correlation to causation, researchers often rely on randomized controlled trials (RCTs). By randomly assigning participants to receive an intervention or a control condition, researchers can isolate the effects of the exposure of interest. If a difference in outcomes emerges, it can be attributed to the exposure rather than preexisting differences among participants. Nevertheless, RCTs are not always feasible due to ethical or practical constraints. For example, it would be unethical to randomly assign pregnant women to take or forgo acetaminophen, given its widely recognized benefits.
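The contrast between a self-selected comparison and a randomized one can be sketched with a small simulation. Everything in it is an assumption made for illustration: the single confounder, the effect size of 2.0, the selection probabilities, and the function names `simulate` and `difference_in_means` are hypothetical and do not come from any study mentioned here.

```python
import random
import statistics


def difference_in_means(rows):
    """Mean outcome of exposed units minus mean outcome of unexposed units."""
    exposed = [y for t, y in rows if t]
    unexposed = [y for t, y in rows if not t]
    return statistics.mean(exposed) - statistics.mean(unexposed)


def simulate(randomized, n=20_000, true_effect=2.0, seed=0):
    """Simulate one study and return its difference-in-means estimate."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        confounder = rng.gauss(0.0, 1.0)  # e.g. a preexisting advantage
        if randomized:
            exposed = rng.random() < 0.5  # coin flip, unrelated to the confounder
        else:
            # Self-selection: a high confounder makes exposure more likely.
            exposed = rng.random() < (0.8 if confounder > 0 else 0.2)
        outcome = 3.0 * confounder + true_effect * exposed + rng.gauss(0.0, 1.0)
        rows.append((exposed, outcome))
    return difference_in_means(rows)


if __name__ == "__main__":
    print("observational estimate:", round(simulate(randomized=False), 2))
    print("randomized estimate:   ", round(simulate(randomized=True), 2))
```

Under these assumptions, the self-selected comparison absorbs the confounder's influence and overstates the effect, while the coin-flip assignment should land close to the assumed value of 2.0.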

In situations where RCTs cannot be conducted, researchers must analyze non-randomized data, such as electronic health records and large-scale studies like the Nurses' Health Study. Designs for such data aim to minimize confounding by leveraging natural variation or adjusting for observed confounders as fully as possible.

One approach is to create or find a source of randomness that nudges some people toward the exposure, in designs often referred to as “randomized encouragement” or “instrumental variables.” For instance, researchers might encourage a randomly chosen group of participants to increase their intake of fruits and vegetables through coupons or targeted messaging. Similarly, they may evaluate how varying access to grocery stores influences health outcomes.
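A randomized encouragement design can be illustrated with the simplest instrumental-variables estimator, the Wald ratio: the effect of encouragement on the outcome divided by the effect of encouragement on uptake. The sketch below is a simulation under assumed numbers; the unobserved `motivation` variable, the uptake rates, the constant effect of 1.5, and all function names are hypothetical.

```python
import random


def simulate_encouragement(n=40_000, true_effect=1.5, seed=1):
    """Return rows of (encouraged, took_up, outcome) from a simulated study."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        motivation = rng.gauss(0.0, 1.0)  # unobserved confounder
        encouraged = rng.random() < 0.5   # randomized, e.g. a mailed coupon
        base_rate = 0.4 if motivation > 0 else 0.2
        took_up = rng.random() < base_rate + (0.4 if encouraged else 0.0)
        outcome = true_effect * took_up + 2.0 * motivation + rng.gauss(0.0, 1.0)
        rows.append((encouraged, took_up, outcome))
    return rows


def mean(values):
    values = list(values)
    return sum(values) / len(values)


def naive_estimate(rows):
    """Compare people who took up the behavior with those who did not."""
    return (mean(y for _, d, y in rows if d)
            - mean(y for _, d, y in rows if not d))


def wald_estimate(rows):
    """Effect of encouragement on the outcome, scaled by its effect on uptake."""
    outcome_gap = (mean(y for z, _, y in rows if z)
                   - mean(y for z, _, y in rows if not z))
    uptake_gap = (mean(d for z, d, _ in rows if z)
                  - mean(d for z, d, _ in rows if not z))
    return outcome_gap / uptake_gap


if __name__ == "__main__":
    data = simulate_encouragement()
    print("naive estimate:", round(naive_estimate(data), 2))
    print("Wald estimate: ", round(wald_estimate(data), 2))
```

Because only the encouragement is randomized, the naive comparison still mixes in motivation, whereas the Wald ratio relies on the encouragement affecting the outcome only through uptake. That assumption is built into this simulation and would need to be defended in a real study.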

Another approach uses difference-in-differences or comparative interrupted time series designs, which exploit policy changes to compare outcomes before and after an intervention. These designs are particularly powerful when they include comparison groups that did not experience the policy change, allowing researchers to account for underlying trends unrelated to the intervention.
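The arithmetic behind a basic difference-in-differences estimate is short enough to write out. The sketch below uses a hypothetical function name and invented group averages, and it assumes the two groups would have followed parallel trends in the absence of the policy.

```python
def difference_in_differences(treated_before, treated_after,
                              control_before, control_after):
    """Change in the treated group minus change in the comparison group."""
    treated_change = treated_after - treated_before
    control_change = control_after - control_before
    return treated_change - control_change


if __name__ == "__main__":
    # Hypothetical average outcomes, invented for illustration only.
    estimate = difference_in_differences(
        treated_before=10.0, treated_after=16.0,
        control_before=9.0, control_after=12.0,
    )
    print(estimate)  # prints 3.0
```

Here the treated group improved by 6 and the comparison group by 3, so 3 of the 6 points are attributed to the background trend and the remaining 3 to the policy.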

Embracing Diverse Research Designs

Comparison group designs, commonly used with cohort studies like the Nurses' Health Study and the All of Us cohort, adjust for characteristics that could introduce confounding. Techniques such as propensity score matching enable researchers to compare individuals who are similar across a wide range of factors, including medical history and family background.
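As a rough sketch of the matching idea, the simulation below estimates each person's propensity score as the share of exposed people with the same covariate profile, then compares every exposed person with the unexposed people whose score is nearest. This is a simplified stand-in for the regression-based scores typically used in practice, and the covariates (`smoker`, `older`), effect sizes, and function names are all hypothetical.

```python
import random
import statistics
from collections import defaultdict


def simulate_cohort(n=20_000, true_effect=1.0, seed=2):
    """Return rows of (profile, exposed, outcome); profile = (smoker, older)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        smoker, older = rng.random() < 0.5, rng.random() < 0.5
        exposed = rng.random() < 0.2 + 0.3 * smoker + 0.3 * older
        outcome = (true_effect * exposed + 2.0 * smoker + 1.5 * older
                   + rng.gauss(0.0, 1.0))
        rows.append(((smoker, older), exposed, outcome))
    return rows


def estimate_propensity(rows):
    """Share of exposed people within each covariate profile."""
    counts = defaultdict(lambda: [0, 0])  # profile -> [n_exposed, n_total]
    for profile, exposed, _ in rows:
        counts[profile][0] += exposed
        counts[profile][1] += 1
    return {profile: k / total for profile, (k, total) in counts.items()}


def matched_estimate(rows):
    """Average gap between each exposed person and nearest-score controls."""
    score = estimate_propensity(rows)
    controls_by_score = defaultdict(list)
    for profile, exposed, outcome in rows:
        if not exposed:
            controls_by_score[score[profile]].append(outcome)
    # Controls sharing the same score are averaged to form one match.
    control_mean = {s: statistics.mean(ys) for s, ys in controls_by_score.items()}
    gaps = []
    for profile, exposed, outcome in rows:
        if exposed:
            nearest = min(control_mean, key=lambda s: abs(s - score[profile]))
            gaps.append(outcome - control_mean[nearest])
    return statistics.mean(gaps)


def naive_estimate(rows):
    """Unadjusted difference in mean outcomes between the two groups."""
    return (statistics.mean(y for _, t, y in rows if t)
            - statistics.mean(y for _, t, y in rows if not t))


if __name__ == "__main__":
    cohort = simulate_cohort()
    print("naive estimate:  ", round(naive_estimate(cohort), 2))
    print("matched estimate:", round(matched_estimate(cohort), 2))
```

The matched estimate recovers the assumed effect only because every confounder in the simulation is observed; matching cannot correct for characteristics that were never measured.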

The strongest research designs incorporate robustness assessments to evaluate how results might be influenced by unobserved confounding variables. A diverse array of randomized and non-randomized designs exists, each suited for specific contexts and research inquiries. It is crucial for researchers to familiarize themselves with these methodologies, allowing for a comprehensive toolkit to address complex causal questions.

The variety of study designs is beneficial, especially when addressing nuanced causal inquiries that may not lend themselves to a single ideal RCT. Often, researchers opt for a combination of studies, each with its strengths and limitations, to build a more complete picture of the evidence. This synthesis of findings benefits from input from various methodological and subject matter experts.

As noted by Cordelia Kwon, M.P.H., a Ph.D. student in health policy at Harvard University, and Elizabeth A. Stuart, Ph.D., professor and chair in the Department of Biostatistics at the Johns Hopkins Bloomberg School of Public Health, the journey to understand causation is ongoing. Determining the causes of conditions such as autism will require continued research across multiple disciplines, inevitably leading to more questions.

The pursuit of knowledge in this field demands a commitment to rigorous study while embracing the complexities inherent in causal analysis. Researchers must remain steadfast in their inquiries, striving for clarity while understanding that definitive answers may often elude them. This ongoing dialogue within the scientific community will gradually illuminate the intricate web of causes and effects that shape our understanding of health and behavior.

-

Science3 weeks ago

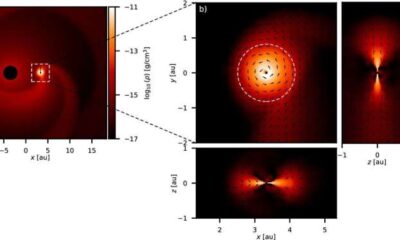

Science3 weeks agoALMA Discovers Companion Orbiting Giant Red Star π 1 Gruis

-

Top Stories2 months ago

Top Stories2 months agoNew ‘Star Trek: Voyager’ Game Demo Released, Players Test Limits

-

Politics2 months ago

Politics2 months agoSEVENTEEN’s Mingyu Faces Backlash Over Alcohol Incident at Concert

-

World2 months ago

World2 months agoGlobal Air Forces Ranked by Annual Defense Budgets in 2025

-

World2 months ago

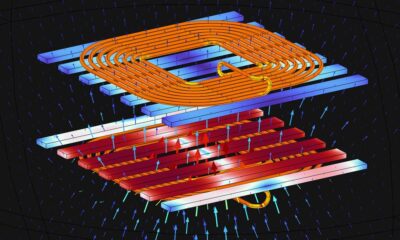

World2 months agoElectrification Challenges Demand Advanced Multiphysics Modeling

-

World2 months ago

World2 months agoMass Production of F-35 Fighter Jet Drives Down Costs

-

Science2 months ago

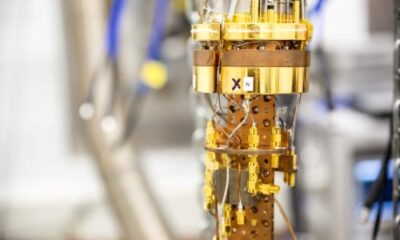

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

Business2 months ago

Business2 months agoGold Investment Surge: Top Mutual Funds and ETF Alternatives

-

Top Stories2 months ago

Top Stories2 months agoDirecTV to Launch AI-Driven Ads with User Likenesses in 2026

-

Entertainment2 months ago

Entertainment2 months agoFreeport Art Gallery Transforms Waste into Creative Masterpieces

-

Health2 months ago

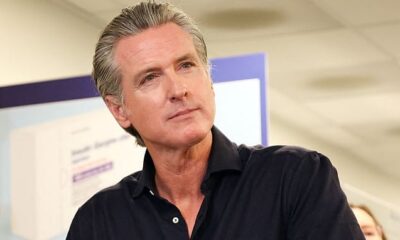

Health2 months agoGavin Newsom Critiques Trump’s Health and National Guard Plans

-

Lifestyle2 months ago

Lifestyle2 months agoDiscover Reese Witherspoon’s Chic Dining Room Style for Under $25