
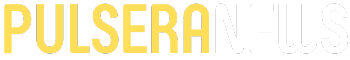

Social Media Faces Reckoning as Elon Musk Reinstates Trolls

Concerns about social media’s role in political discourse have intensified in light of the controversial decisions Elon Musk has made since acquiring Twitter, now rebranded as X. After completing his purchase in 2022, Musk reinstated numerous accounts that had previously been banned for hate speech and disinformation, as well as the account of Donald Trump. These moves have raised alarms about a resurgence of foreign influence and inauthentic behavior on the platform.

The problem is not new. In 2014, BuzzFeed News reported on the Internet Research Agency, a Russian organization that ran a wide-ranging propaganda campaign across social media. Its internal communications described strategies for manipulating American political discussion by creating fake accounts and flooding platforms such as Facebook and Twitter with divisive content. Despite more than a decade of awareness, the U.S. still debates the extent of foreign trolling, while fraudulent accounts continue to proliferate.

A 2024 CNN analysis of 56 pro-Trump accounts on X uncovered a “systematic pattern of inauthentic behavior.” Some of these accounts carried blue check marks, which under Musk are available through a paid subscription rather than reflecting an identity review, yet which many users still read as a sign of verification. Several accounts used stolen images of prominent European influencers to appear more credible. Under Musk’s leadership, X has dismantled many of its safeguards against misinformation, creating an environment in which extremist propaganda can flourish.

X’s monetization strategy relies heavily on engagement, which is often fueled by outrage over cultural issues. This dynamic has proven effective at galvanizing support for specific policies, particularly during the second Trump administration. However, recent tensions among Trump’s supporters, including those surrounding an incident involving conservative commentator Charlie Kirk, suggest that this coalition may be fracturing.

In response to growing concerns about foreign bots and their impact on American discourse, Nikita Bier, head of product at X, proposed a feature that would reveal where users’ accounts are based. After a public appeal from Katie Pavlich of Fox News, Bier promised action within 72 hours. The resulting rollout was chaotic and error-prone, but it drew praise from some conservative figures, including Florida’s Republican Governor Ron DeSantis.

The feature produced immediate revelations: reports indicated that some top MAGA accounts were operating from Nigeria. It also raised significant concerns about user safety and privacy. Critics warned that exposing users’ locations could put journalists and activists in danger, and that without proper safeguards and accountability, the feature could have serious consequences for people reporting on authoritarian regimes.

The implications of X’s new initiative extend beyond political figures to journalists, whose engagement with the platform has declined significantly in response to Musk’s controversial policies. Media professionals once flocked to Twitter for real-time news and commentary, but an environment saturated with inauthentic accounts now threatens both their safety and their credibility.

Other social media platforms are grappling with similar problems. Testimony from Vaishnavi Jayakumar, Instagram’s former head of safety, revealed that accounts engaged in human sex trafficking could violate platform policies multiple times before being suspended. This raises serious questions about how effectively these companies enforce their own rules and how committed they are to user safety.

Internal studies by Meta, Instagram’s parent company, pointed to links between social media use and mental health problems, including increased anxiety and depression. In one notable study, known internally as “Project Mercury,” users who deactivated their accounts for a week reported significant improvements in their mental well-being. The findings suggest a troubling reality: despite holding evidence that its products harmed public health, Meta appeared to prioritize revenue over user safety.

The central issue remains the profit motive built into social media platforms. Engagement, often driven by anger and controversy, is essential to their business models. Algorithms could be adjusted to reduce the reach of harmful content, but because the platforms have little financial incentive to do so, their commitment to transparency and user safety remains in doubt.

There is a growing consensus that self-regulation is insufficient and that systemic regulatory reform is more pressing than ever. Social media platforms must be held accountable for fostering an environment conducive to misinformation and manipulation. Without significant changes to how they operate, the cycle of outrage and misinformation is unlikely to abate.

-

Top Stories2 months ago

Top Stories2 months agoNew ‘Star Trek: Voyager’ Game Demo Released, Players Test Limits

-

World2 months ago

World2 months agoGlobal Air Forces Ranked by Annual Defense Budgets in 2025

-

Science2 weeks ago

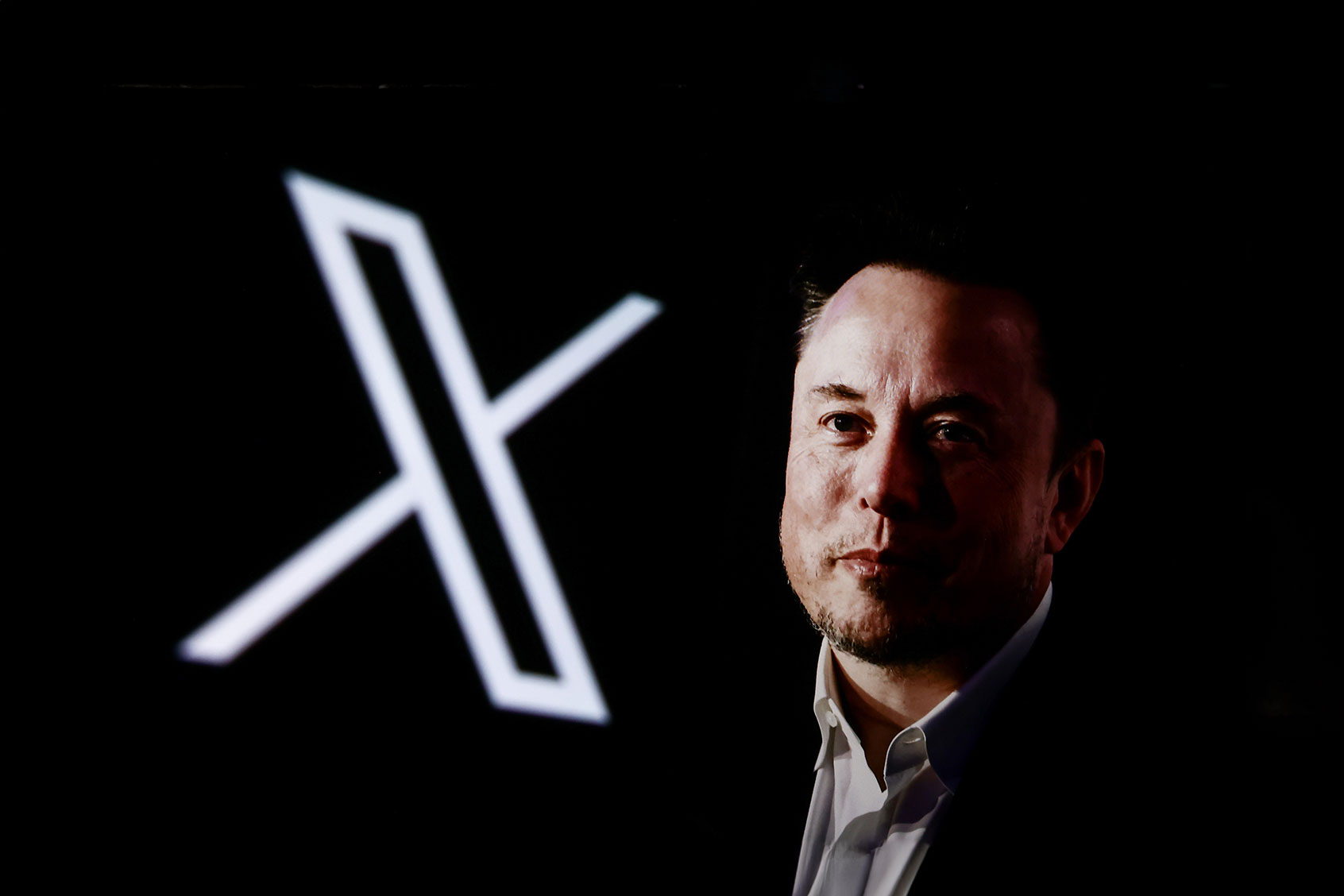

Science2 weeks agoALMA Discovers Companion Orbiting Giant Red Star π 1 Gruis

-

World2 months ago

World2 months agoMass Production of F-35 Fighter Jet Drives Down Costs

-

World2 months ago

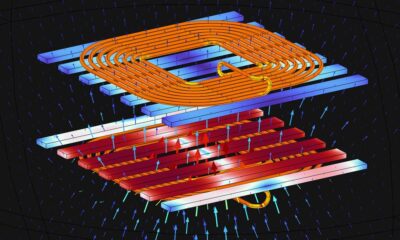

World2 months agoElectrification Challenges Demand Advanced Multiphysics Modeling

-

Business2 months ago

Business2 months agoGold Investment Surge: Top Mutual Funds and ETF Alternatives

-

Science2 months ago

Science2 months agoTime Crystals Revolutionize Quantum Computing Potential

-

Top Stories2 months ago

Top Stories2 months agoDirecTV to Launch AI-Driven Ads with User Likenesses in 2026

-

Entertainment2 months ago

Entertainment2 months agoFreeport Art Gallery Transforms Waste into Creative Masterpieces

-

Business2 months ago

Business2 months agoUS Government Denies Coal Lease Bid, Impacting Industry Revival Efforts

-

Health2 months ago

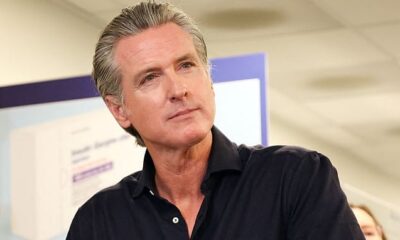

Health2 months agoGavin Newsom Critiques Trump’s Health and National Guard Plans

-

Politics1 month ago

Politics1 month agoSEVENTEEN’s Mingyu Faces Backlash Over Alcohol Incident at Concert